More Than Touch: Understanding How People Use Skin as an Input Surface for Mobile Computing

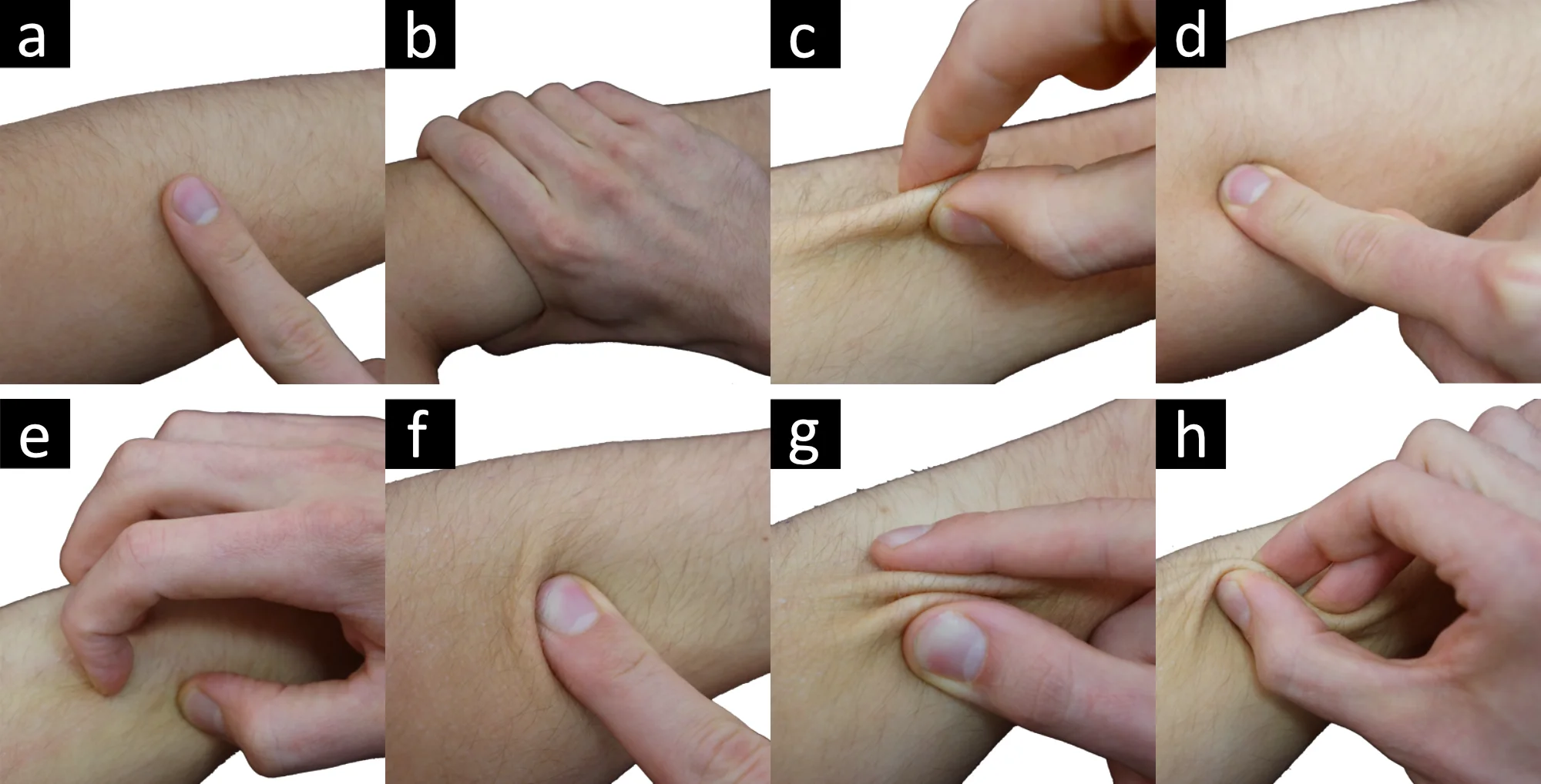

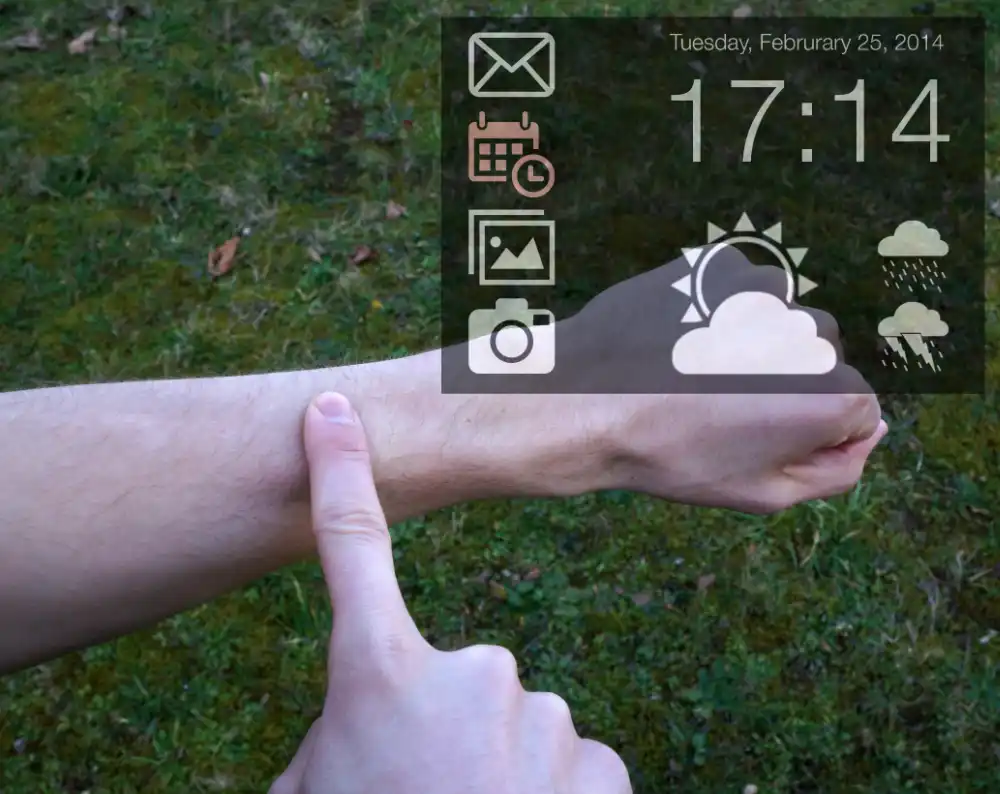

This paper contributes results from an empirical study of on-skin input, an emerging technique for controlling mobile devices. Skin is fundamentally different from off-body touch surfaces, opening up a new and largely unexplored interaction space. We investigate the characteristics of the various skin-specific input modalities, analyze what kinds of gestures are performed on skin, and identify the preferred input locations. Our main findings show that (1) users intuitively leverage the properties of skin for a wider range of more expressive commands than on conventional touch surfaces; (2) established multi-touch gestures can be transferred to on-skin input; (3) physically uncomfortable modalities are deliberately used for irreversible commands and for expressing negative emotions; and (4) the forearm and the hand are the most preferred locations on the upper limb for on-skin input. We detail users’ mental models and contribute a first consolidated set of on-skin gestures. Our findings provide guidance for developers of future sensors as well as for designers of future applications of on-skin input.
